Smart speakers

With the rise of the IoT, digital companies like Amazon, Google and Apple have used the traditional analogue loudspeaker as a platform for a whole new concept – the smart speaker. While audio output is, and always will be, essential to smart speakers, they provide many other functions as well. As network-connected IoT nodes, they can receive information, act on it, and create control outputs as well as drive their loudspeaker cone. Their capability comes from the AI package that they host; the best-known of these are Amazon Alexa, Google Assistant, Microsoft Cortana and Apple’s Siri.

These AI packages run not only on smart speakers, but also on many other hardware devices, manufactured either by the AI supplier or by a third party. Around 1000 companies announced Alexa integration into numerous devices at CES last January. Platforms include smartphones, computers and even other home automation devices, but we will focus as far as possible on the smart speaker offerings and their capabilities. However, we’ll also explore another, very different application for smart speakers: their growing use in multi-area public address systems in airports, railway stations and other public areas, including bus stops and public transport vehicles.

Voice-controlled personal assistants

In October 2017, What Hi-Fi? published an article about smart speakers, entitled ‘Smart speakers – everything you need to know’. It provides interesting coverage of what the devices are, and trends in the marketplace.

What can a smart speaker do?

A smart speaker comprises an AI package, as mentioned above, running on a hardware host. As such, it can respond to voice commands requesting it to:

- Wirelessly connect via Bluetooth

- Play songs or playlists

- Set a timer

- Control your AV system

- Control your lights and heating

- Provide a weather or traffic report

- Book an Uber

- Create a to-do list

- Tell a joke

Its exact capabilities depend on which AI solution it hosts, and the other smart devices in your home.

Smart speakers also work with apps such as Spotify, TuneIn Radio, Philips Hue (lighting), Nest, Hive, Samsung SmartThings, IFTTT (If This Then That), Kayak, Domino’s Pizza and others.

Fig.1: Amazon Echo smart speaker – Image via wikipedia

Multi-room functionality

The ability to play different audio tracks in multiple rooms used to require complex control systems and intrusive wiring. An article, ‘What is multi-room Hi-Fi?’, describes how smart speakers remove this cost and complexity with simple, affordable, voice-controlled wireless systems.

These speakers can communicate with one another, and can be controlled by a phone, tablet or computer app, or directly by voice. It’s possible to play the same track in unison, or different music in each room. Other options include:

- Streaming music from Apple Music, Spotify or Tidal

- Retrieving music from a network attached storage device (NAS)

- Streaming from a phone

More advanced systems may offer AirPlay, Bluetooth or Chromecast.

Systems are available from hi-fi oriented brands like Sonos, Pure, Raumfeld, Yamaha, Bose, Sony, LG, Panasonic and Samsung. More recently, offerings have also begun to appear from Apple and Google. Multi-room systems operate either by creating their own mesh network, or by using the building’s existing Wi-Fi network. Sonos, LG and Tibo speakers, for example, form their own mesh network. This makes the systems more robust, as they aren’t dependent on the home Wi-Fi when streaming music. By contrast, smart speakers that use Wi-Fi not only depend on the network’s strength and stability, but also consume some of its bandwidth.

Plenty of options for integrating a multi-room system also exist. Most multi-room speakers can also function as standalone speakers, and systems can include speakers, soundbars, amplifiers and streamers. The amplifiers and streamers allow connection to an existing hi-fi system, using Sonos Connect or Bluesound Node for example. Denon and Yamaha also provide facilities to integrate their AV electronics, to give their home cinema amplifiers multi-room capabilities.

AI packages and speaker platforms

Above, we’ve looked at smart speaker capabilities and how they can function, especially in multi-room installations. But what types of products are available, in terms of AI/speaker combinations? Here are some examples – note that the first two are from hi-fi manufacturers that have added AI, while the last two are more focused on the AI functionality:

Harman Kardon INVOKE - runs Microsoft Cortana

- High quality audio with multiple woofer and tweeter speakers

- Voice-activated assistant capabilities

- Email management coming soon

- Works across multiple devices including Windows 10 PC and phone

- Voice-activated Skype

- Control smart home devices such as lights and heating

Sonos One

- Has Amazon Alexa built-in

- Supports multi-room operation (“Alexa, play Bowie in the lounge” or “Alexa, play Bowie everywhere”)

- Can combine two speakers to make a stereo pair

- High quality noise-cancelling 6-microphone array keeps communication with Alexa reliable even when music is loud

- Supports Amazon Prime Music and Spotify

Google Home Mini

- Wi-Fi and Bluetooth support

- 360-degree sound with 40 mm driver

- Far-field voice recognition microphone

- Works with Android and iOS

- Supports Spotify, Google Play Music, TuneIn and BBC

- Supports Nest, Philips Hue, Wemo, TP-Link, SmartThings, IFTTT, Hive, Lightwave, Wiz, Netatmo and Tado home automation devices

- Supports YouTube, Netflix and Google Photos entertainment channels

- Supports BBC News, FT, Sky News, Sky Sports, The Telegraph, The Guardian, The Economist, Monocle, The Sun, TC, CNN, NPR One, The Huffington Post and Euronews

- Streaming devices include Google Chromecast, Google Chromecast Audio and Philips, Sony, Xiaomi, Nvidia, Bang & Olufsen, Polk and Raumfeld

Amazon Echo 2 - runs Amazon Alexa

- Second generation, cheaper than the original Echo

- Wi-Fi and Bluetooth streaming

- Audio line out for connecting to legacy devices

- Second-generation far field microphone technology – better processing of wake word and improved noise cancellation

- Alexa Routines: Program and control multiple devices with a single command. (“Alexa Good Morning” could signal your smart lights to come on, blinds to open and your kettle to boil.)

- Compatible brands include Philips Hue, TP Link and Wemo

- Set-up is done through the Alexa app

- Call or message other Alexa device users
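The Routines idea in the list above, where one spoken phrase fans out into several device commands, can be pictured as a simple lookup table. The following is only an illustrative sketch and not how Amazon implements Routines: the `ROUTINES` table, the device names and the `send_command` callback are all hypothetical.

```python
from typing import Callable

# Hypothetical routine table: one spoken phrase maps to several device actions.
ROUTINES: dict[str, list[tuple[str, str]]] = {
    "good morning": [
        ("lights", "on"),
        ("blinds", "open"),
        ("kettle", "boil"),
    ],
}


def run_routine(phrase: str, send_command: Callable[[str, str], None]) -> int:
    """Send every action registered for a phrase; return how many were sent."""
    actions = ROUTINES.get(phrase.strip().lower(), [])
    for device, action in actions:
        send_command(device, action)
    return len(actions)


if __name__ == "__main__":
    # Stand-in transport that only prints; a real system would call a device API.
    run_routine("Good Morning", lambda device, action: print(f"{device} -> {action}"))
```

Keeping the transport as a callback means the same table could drive any smart home back end without changing the routine logic.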

The developers’ perspective

New smart speakers can be brought to market by developers working with Google Assistant, Amazon Alexa and other AI packages. Google and Amazon, for example, provide guidelines to developers on how to use their products:

Google Assistant developer guidelines

Developers can start work with Google Assistant very simply, and without coding knowledge. Simple applications such as a trivia game or personality quiz can be built by filling in a spreadsheet. Predefined personalities can then be added to define the voice, tone, music, sound effects and natural conversational feel for your app’s users.

More powerful development paths are also provided. The main way users interact with Google Assistant is by conducting natural-sounding back and forth conversations with it. Sophisticated apps can be built to exploit this user interface, known as ‘Conversational UI’. Conversations can be designed for a variety of surfaces, such as voice-activated speakers, or visual conversations on Android phones.

The apps extend the Google Assistant by letting you build actions that allow users to get things done with your products and services. The simplest development option is to use a template, but other choices exist:

Dialogflow can be used to design and build your own conversational experience. It includes a Natural Language Understanding (NLU) engine that parses natural, human language.

Actions SDK is designed for simple actions that have very short conversations with limited user input variability. Such actions often don’t require robust language understanding and typically accomplish one quick use case.
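To give a feel for what "parsing" an utterance into an intent and its parameters means, here is a deliberately simple, pattern-based sketch. It is not Dialogflow and does not use any Google API; a real NLU engine is far more tolerant of phrasing than these regular expressions. The intent names and patterns are hypothetical.

```python
import re
from typing import Optional

# Hypothetical intents; each pattern captures its parameters as named groups.
INTENT_PATTERNS = {
    "set_timer": re.compile(r"set a timer for (?P<minutes>\d+) minutes?"),
    "play_music": re.compile(r"play (?P<artist>.+?) in the (?P<room>\w+)"),
}


def match_intent(utterance: str) -> Optional[dict]:
    """Return the first matching intent and its parameters, or None."""
    text = utterance.strip().lower()
    for name, pattern in INTENT_PATTERNS.items():
        found = pattern.search(text)
        if found:
            return {"intent": name, "parameters": found.groupdict()}
    return None
```

A short, fixed-phrase action of the kind the Actions SDK targets can get by with matching this rigid; anything conversational needs a proper NLU engine.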

Alexa developer guidelines

Alexa is Amazon’s cloud-based voice service available on tens of millions of devices from Amazon and third-party device manufacturers. With Alexa, you can build natural voice experiences that offer customers a more intuitive way to interact with the technology they use every day. Amazon’s collection of tools, APIs, reference solutions, and documentation make it easy for anyone to build with Alexa.

Developers can add capabilities, or skills, to Alexa using the Alexa Skills Kit (ASK), a collection of self-service APIs, tools, documentation and code samples for building natural, voice-first experiences. Alexa can also be integrated directly into third-party products with the Alexa Voice Service (AVS), adding hands-free control to any connected device.

Additionally, smart cameras, lights, entertainment systems and other devices can be connected to Alexa, to facilitate voice control. It’s also possible to build Alexa Gadgets, or create interactive skills that work with Alexa Gadgets such as Echo Buttons.
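A custom skill built with ASK is, at its core, a service that receives a JSON request from Alexa and returns a JSON response. The sketch below shows that shape as a plain Python function in the style of an AWS Lambda handler, without using any SDK. The intent name `TellJokeIntent` and the spoken text are hypothetical, and the field names reflect the custom skill JSON format as we understand it, so check them against Amazon's documentation before relying on them.

```python
def build_response(speech: str, end_session: bool = True) -> dict:
    """Wrap plain text in the JSON envelope a custom skill returns."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": speech},
            "shouldEndSession": end_session,
        },
    }


def lambda_handler(event: dict, context: object = None) -> dict:
    """Route an incoming skill request by its type and intent name."""
    request = event.get("request", {})
    if request.get("type") == "LaunchRequest":
        # The user opened the skill without asking for anything specific.
        return build_response("Welcome. Ask me for a joke.", end_session=False)
    if request.get("type") == "IntentRequest":
        intent_name = request.get("intent", {}).get("name")
        if intent_name == "TellJokeIntent":  # hypothetical intent name
            return build_response("I would tell you a UDP joke, but you might not get it.")
    return build_response("Sorry, I did not understand that.")
```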

Smart speakers for public address systems

Passengers in transit hubs such as railway stations or airports, or at widely distributed en route points like bus stops or on vehicles, continuously need real-time information about transport schedules and status. Delivering this information is a complex task, as each small area needs its own information feed, and that information can change rapidly.

The challenge can be met efficiently with modern speaker systems where local speakers can be individually addressed and fed with their applicable information from a central controller.
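As a rough illustration of individually addressed speakers, the sketch below models a central controller that knows which speakers serve which area and hands each one only the message meant for it. The class, speaker IDs and area names are hypothetical and do not describe any particular product.

```python
from collections import defaultdict


class AnnouncementController:
    """Toy central controller that addresses speakers individually by area."""

    def __init__(self) -> None:
        # Maps an area name to the IDs of the speakers installed there.
        self._speakers_by_area: dict[str, set[str]] = defaultdict(set)

    def register(self, speaker_id: str, area: str) -> None:
        """Record that a speaker serves a given area."""
        self._speakers_by_area[area].add(speaker_id)

    def route(self, area: str, message: str) -> dict[str, str]:
        """Return the message for each speaker in the area, and no others."""
        targets = self._speakers_by_area.get(area, set())
        return {speaker_id: message for speaker_id in sorted(targets)}


if __name__ == "__main__":
    controller = AnnouncementController()
    controller.register("spk-01", "platform 1")
    controller.register("spk-02", "platform 1")
    controller.register("spk-03", "bus stop A")
    print(controller.route("platform 1", "The next train is delayed by five minutes."))
```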

Text to speech systems

Text to speech systems can be deployed at bus stops and on railway platforms, alongside scrolling LED digital displays. These systems can generate audio output from the text data delivered to these displays, for the benefit of blind or visually impaired passengers. Advanced systems may include local radio (LPFM) broadcasts to announce departure and arrival times, aiding passengers entering car parks.

Voice Annunciation Systems

Voice Annunciation Systems (VAS) make automatic onboard voice announcements covering the next stop, major transfer points, landmarks and safety advisories. Passengers are also kept informed of their current location. VAS announcements are co-ordinated with LED signage on board trains or buses.

TextSpeak is a US-based designer and manufacturer of text to speech systems. Their product range includes a series of embedded modules that convert ASCII text to a natural, clear voice with unlimited vocabulary. These plug-in devices operate from a wide range of inputs such as data feeds, digital signage, and marquee and scrolling LED displays, to generate speech in real time. Outputs can be delivered to mass notification and passenger information systems, using the company’s earBridge amplifier systems for mobile and fixed applications.

These products can be used as retrofit upgrades to support the modernisation of Passenger Information Display Systems (PIDS). TextSpeak application examples include the Paris Transit System and the New York City Subway.

Automatic Flight Announcement Systems (AFAS)

Imagine that you’ve just arrived at a busy airport; now think of the variety of information messages you’ll potentially need to complete your transit without unnecessary stress, confusion or delay.

Naturally, any information-providing system must cater for both arrival and departure passengers. The types of announcement needed include:

- Security check-in

- Boarding/final boarding calls

- Gate information

- Flight arrival messages

- Baggage conveyor delayed

- Cancelled departure or arrival

- Other custom messages

Delivering these message types automatically, with myriad versions to meet the particular needs of each arrival and departure gate, the baggage hall and every other area, can be handled by a modern Automatic Flight Announcement System, or AFAS.

One such AFAS, called Blazon Pro AFAS, is supplied by Teckinfo. The system, which runs in a Windows environment, is modular and scalable. It can provide automatic announcements relating to scheduled and non-scheduled events as listed above, and can do so in one of three modes: automatic, semi-automatic or manual.

In automatic operation, the system accepts input from ATC, FIDS/ATS or PIDS systems and generates announcements using pre-configured message templates. In semi-automatic use, the operator receives information from the ATC, FIDS/ATS or PIDS system, amends the queued message as necessary, and then sends it out for announcement. In manual mode, the operator strings the messages together at the AAS terminal, and then delivers the announcement.

The system can store, recall, generate, assemble and play back pre-recorded phrases, full messages and general announcements, and synchronises its content with video presentations.

(NB: ATC = Air Traffic Control, FIDS = Flight Information Display System, ATS = Airport Transit System, PIDS = Passenger Information Display System and AAS = Automatic Announcement System)
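The automatic and semi-automatic modes described above can be sketched with nothing more than string templates. This is an illustrative assumption about how template-driven announcements might work, not a description of the Blazon Pro software: the template wording, the event fields and the optional `amend` callback (standing in for the operator's edit in semi-automatic mode) are all hypothetical.

```python
from string import Template
from typing import Callable, Optional

# Hypothetical pre-configured message templates, keyed by event type.
TEMPLATES = {
    "boarding": Template("Flight $flight to $destination is now boarding at gate $gate."),
    "gate_change": Template("Flight $flight to $destination will now depart from gate $gate."),
    "cancelled": Template("Flight $flight to $destination has been cancelled."),
}


def build_announcement(event: dict, amend: Optional[Callable[[str], str]] = None) -> str:
    """Fill the template for an event; let an operator amend it if requested."""
    text = TEMPLATES[event["type"]].substitute(event)
    # Automatic mode passes no callback; semi-automatic mode supplies one.
    return amend(text) if amend else text


if __name__ == "__main__":
    event = {"type": "boarding", "flight": "XY123", "destination": "Paris", "gate": "12"}
    print(build_announcement(event))
    print(build_announcement(event, amend=lambda text: text + " Please have your passport ready."))
```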

Fig.2: Passengers in airports need detailed, constantly-updated information – Image via Flickr

Public Address technology for developers

Audio-over-IP, also known as AoIP or Networked Audio, is the latest audio signal distribution technology, allowing long distance, fully controllable voice and music to be distributed over standard Ethernet cable for background music and public address applications. Being IP-based, such systems allow specific content to be delivered exclusively to selected stations. Unlike conventional PA systems, which require a coaxial cable wired to every speaker, AoIP systems treat microphones and speakers as elements in the network, accessed by IP address over existing LAN/WANs.

2N is a company that exploits this technology with their NetSpeaker Audio-over-IP System. Controlled by a freely-downloadable, PC-based Central Management Software tool, this system allows users to stream music, recorded voice announcements, tones, jingles and promotions or live paging announcements to any on-site zone, remote site or multiple zones/sites via a standard Web, LAN or WAN connection.
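To make the idea of addressing speakers by IP concrete, here is a minimal sketch of a controller sending one chunk of audio to every speaker in a zone over UDP. It is not the NetSpeaker protocol or any real AoIP standard: the 4-byte sequence header, the zone table, the port number and the addresses (taken from the 192.0.2.0/24 documentation range) are all assumptions made for illustration.

```python
import socket
import struct

# Hypothetical 4-byte header carrying a sequence number so receivers can reorder.
HEADER = struct.Struct("!I")

# Hypothetical zone table using documentation-only addresses (192.0.2.0/24).
ZONES = {
    "platform 1": [("192.0.2.11", 5004), ("192.0.2.12", 5004)],
    "concourse": [("192.0.2.21", 5004)],
}


def open_udp_socket() -> socket.socket:
    """Create the datagram socket a controller would send audio through."""
    return socket.socket(socket.AF_INET, socket.SOCK_DGRAM)


def frame(sequence: int, pcm: bytes) -> bytes:
    """Prefix a chunk of raw audio with its sequence number."""
    return HEADER.pack(sequence) + pcm


def send_chunk(sock, zone: str, sequence: int, pcm: bytes) -> int:
    """Send one audio chunk to every speaker in a zone; return the count sent."""
    packet = frame(sequence, pcm)
    targets = ZONES.get(zone, [])
    for address in targets:
        sock.sendto(packet, address)
    return len(targets)
```

On a real network, a controller would call `send_chunk(open_udp_socket(), "concourse", 0, chunk)` repeatedly with increasing sequence numbers; only the speakers listed for that zone receive the audio.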

A couple of voice recognition projects

If you’d like to experiment with voice recognition and interaction technology, the Farnell element14 website contains projects to help you.

Matrix Creator is one such project. It comprises step-by-step instructions for setting up the Alexa Voice Service (AVS) on a Raspberry Pi with a Matrix Creator. It demonstrates how to access and test AVS using the AVS Java sample app (running on a Raspberry Pi), a Node.js server, and a third-party wake word engine using the MATRIX mic array. You will use the Node.js server to obtain a Login with Amazon (LWA) authorization code by visiting a website using your Raspberry Pi's web browser.

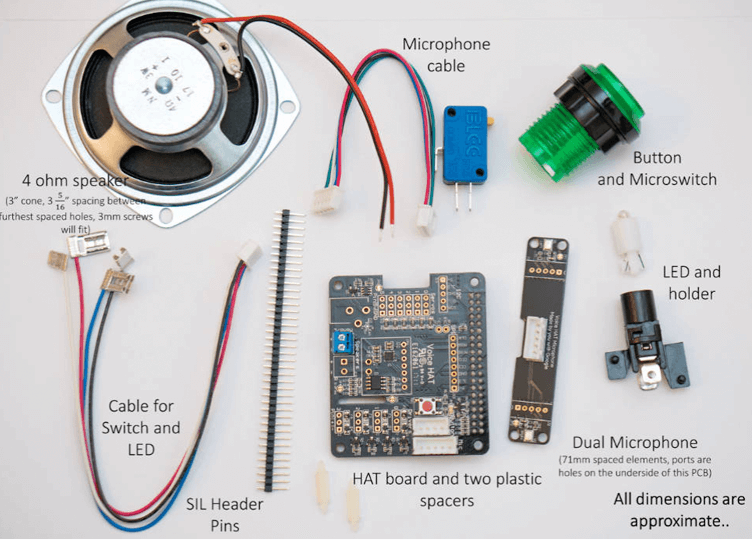

Another project kit, from Google and called AIY Projects, arrived in May 2017 inside Raspberry Pi’s official magazine, The MagPi. This hardware kit, which allows you to add voice interaction to your Raspberry Pi projects, comprises a Google Voice HAT (Hardware Attached on Top) accessory board, a Voice HAT stereo microphone board, a large arcade button, wires and a custom cardboard case to mount it all in.

You only need to add a Raspberry Pi 3. Then, after some software setup, you’ll have access to the Google Assistant SDK and Google Cloud Speech API.

The Farnell element14 website contains information on how to set up this project.

Fig. 3: AIY project kit components

Conclusion

By 2018, 30% of our interactions with technology will be through ‘conversations’ with smart machines. Smart speakers running assistants such as Alexa and Google Assistant are obvious examples of this technology, and increasingly familiar parts of our home environment. Further improvements in voice recognition, and the deep learning AI that drives it, will provide new opportunities for systems developers across a much wider range of applications, especially as development tools become more widely available.

We have also seen the benefits of simply upgrading speakers to network-visible devices that can be controlled individually, and the implications for building more flexible, efficient and economical public address systems.

References

https://www.whathifi.com/google/home/review

https://www.whathifi.com/advice/smart-speakers-everything-you-need-to-know

https://www.whathifi.com/advice/multi-room-audio-everything-you-need-to-know

https://www.microsoft.com/en-us/cortana/devices/invoke

https://www.whathifi.com/sonos/one/review

https://www.whathifi.com/amazon/echo-2/review

https://developers.google.com/actions/design

https://developer.amazon.com/alexa

http://www.blazonpro.com/wp-content/uploads/blazon-pro-afas.pdf

https://www.gartner.com/doc/3021226/market-trends-voice-ui-consumer

Smart speakers. Date published: 15th January 2018 by Farnell element14