Machine Learning for Embedded Systems

The arrival in the home of online interactive services such as Apple’s Siri and Amazon Echo alerted consumers to the rise of artificial intelligence (AI) and machine learning. Although these services are delivered through a variety of embedded systems, their core AI algorithms run in the cloud. That is not a long-term solution for the many applications of machine learning that future embedded systems will provide.

Self-driving cars are learning how to deal with obstacles through examples presented to them in simulations and real-world driving conditions. Similarly, robots can now be guided by operators to perform tasks instead of being programmed laboriously by hand. Although these systems could call on the cloud for some services, the core machine-learning algorithms will need to run locally because they cannot afford the latency that sending data to the cloud incurs.

Many systems will simply not have convenient access to the cloud. For example, drilling systems needed for resource extraction are often in remote locations far away from high-speed wireless infrastructure. But the equipment operators are beginning to demand the power of machine learning to monitor more closely what is happening to machinery and watch for signs of trouble. Conventional closed-loop algorithms often lack the flexibility to deal with the many potential sources of failure and interactions with the environment in such systems. By using machine learning to train systems on the behaviour of real-world interactions it is possible to build in greater reliability.

Surveillance is another sector where local processing will be needed to identify immediate threats, with the cloud called on for additional processing and lookups in online databases to confirm whether further action is required. Even the wireless communications links used to relay the information are beginning to make use of machine learning. For example, the parameters that control 5G wireless modems are so complex that some implementors have turned to machine learning to tune their performance based on experience in the field.

The range of applications for machine learning is mirrored by the number of approaches that are available. Today, much of the focus in cloud-based artificial intelligence is on the deep neural network (DNN) architecture. The DNN marked the point at which neural-network concepts became practical for image and audio classification and recognition, with later variants such as adversarial and recurrent DNNs becoming important for real-time control tasks.

Originally, neural networks employed a relatively flat structure, with no more than one ‘hidden’ layer between the input and output layers of simulated neurons. In the mid-2000s, researchers developed more efficient techniques for training. Although the process remained computationally intensive, it became possible to increase the number of hidden layers – sometimes to hundreds – and with that the complexity of the data the neural networks could handle.

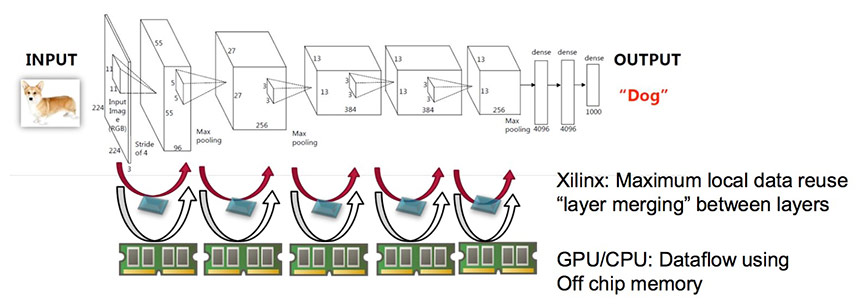

Fig.1: Low-latency Inference by Layer Dataflow On Chip

The nature of the layers themselves changed with the introduction of convolutional layers and pooling layers. These are not fully connected in the way that the layers in the original neural networks were. These layers perform localised filtering and collation of inputs that are useful for extracting high-level features and performing dimension reduction that lets the DNN perform classifications on highly complex inputs.
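The localised filtering and collation described above can be sketched in a few lines of plain Python. This is only an illustration: the function names `conv2d` and `max_pool`, the 2×2 edge-detecting kernel and the toy 5×5 image are assumptions made for the example, and the convolution is written as the sliding dot product (cross-correlation) that DNN frameworks commonly use.

```python
def conv2d(image, kernel):
    """Slide a small kernel over the image; each output depends only on a local patch."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def max_pool(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    return [
        [
            max(feature_map[r + i][c + j]
                for i in range(size) for j in range(size))
            for c in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for r in range(0, len(feature_map) - size + 1, size)
    ]


# Toy image with a vertical edge between columns 1 and 2 (illustrative data).
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical kernel that responds to left-to-right increases in brightness.
edge_kernel = [[-1, 1],
               [-1, 1]]

features = conv2d(image, edge_kernel)   # 4x4 map, non-zero only at the edge
pooled = max_pool(features)             # 2x2 map: dimension reduction
```

The convolution produces a response only where the edge sits, and the pooling step shrinks the 4×4 feature map to 2×2 while keeping that response.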

There are many more types of machine-learning algorithm, and some systems may make use of more than one technique at once. One current direction in R&D for machine learning in high-dependability systems is to use a second algorithm as a backup. Since the introduction of DNNs, concerns have grown over their reliability under difficult conditions and their susceptibility to adversarial examples. These are deliberately altered inputs that have caused trained systems to ignore or misread road signs, for example. Simpler algorithms that have more predictable behaviour, even if they are less capable, can act as backups to prevent unwanted behaviour at the system level.

Before DNNs became feasible, the support vector machine (SVM) was commonly used in image- and signal-classification tasks. The ability of DNNs to handle highly complex, high-resolution inputs made many SVM implementations redundant. But for data with lower dimensionality the SVM can still be effective, especially for control systems that have chaotic dynamics. The SVM takes its data as a set of multi-dimensional vectors and attempts to define a hyperplane between related clusters of data.
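To make the idea of a separating hyperplane concrete, the following is a minimal sketch of a linear SVM trained by sub-gradient descent on the hinge loss. The training routine, its hyperparameters (`epochs`, `lr`, `reg`) and the two toy clusters are all assumptions made for illustration; a real project would more likely rely on a library solver.

```python
def train_linear_svm(points, labels, epochs=200, lr=0.1, reg=0.01):
    """Return (w, b) describing the hyperplane w.x + b = 0.

    labels must be +1 or -1. This is a simple sub-gradient method on
    the regularised hinge loss, not a production-grade solver.
    """
    w = [0.0] * len(points[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(points, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:
                # Point is inside the margin: move the hyperplane away from it.
                w = [wi + lr * (y * xi - reg * wi) for wi, xi in zip(w, x)]
                b += lr * y
            else:
                # Point is safely classified: apply only the regularisation step.
                w = [wi - lr * reg * wi for wi in w]
    return w, b


def classify(w, b, x):
    """Report which side of the hyperplane the vector x falls on."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1


# Two illustrative clusters of 2-D vectors (made-up data).
points = [(-2.0, -2.0), (-1.5, -2.5), (-2.5, -1.5),
          (2.0, 2.0), (1.5, 2.5), (2.5, 1.5)]
labels = [-1, -1, -1, 1, 1, 1]

w, b = train_linear_svm(points, labels)
predictions = [classify(w, b, p) for p in points]
```

Once trained, inference is a single dot product and a comparison, which is one reason the technique remains attractive for low-dimensional data on small processors.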

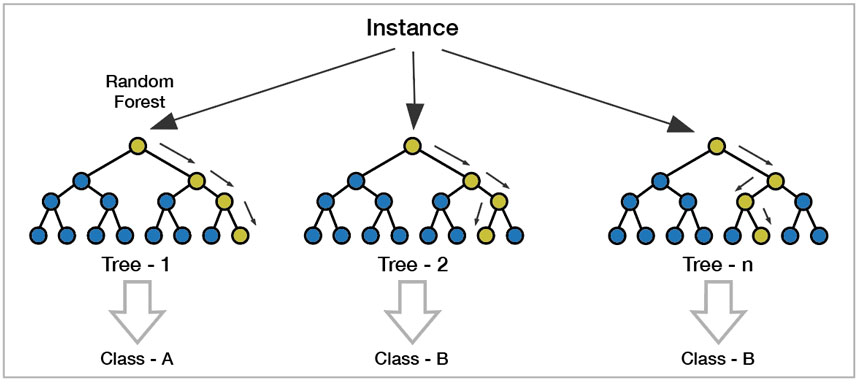

Since the earliest experiments into artificial intelligence, decision trees have been important. Originally, these were hand-crafted using expert input to define rules that a machine could use to determine how to process data. The random forest combines the concept of decision trees with machine learning. The algorithm works by creating many decision trees from the training data, taking an average of the output – which may be a mode for classification tasks or a mean for regression applications – and outputting that single value.

Fig.2: Decision trees from the training data – Image via Premier Farnell
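The aggregation step of a random forest, where the outputs of many trees are reduced to a single value, can be sketched as follows. Only the voting and averaging are shown; the "trees" here are hypothetical hand-written stand-ins rather than trees learned from training data, and the sensor names and thresholds are invented for the example.

```python
from collections import Counter
from statistics import mean


def forest_classify(trees, sample):
    """Classification: return the mode (majority vote) of the trees' outputs."""
    votes = [tree(sample) for tree in trees]
    return Counter(votes).most_common(1)[0][0]


def forest_regress(trees, sample):
    """Regression: return the mean of the trees' outputs."""
    return mean(tree(sample) for tree in trees)


# Hypothetical stand-ins for trained decision trees (not learned from data).
class_trees = [
    lambda s: "fault" if s["vibration"] > 0.7 else "ok",
    lambda s: "fault" if s["temperature"] > 90 else "ok",
    lambda s: "fault" if s["vibration"] > 0.5 and s["temperature"] > 80 else "ok",
]
regress_trees = [lambda s: 10.0, lambda s: 12.0, lambda s: 14.0]

sample = {"vibration": 0.8, "temperature": 85}
label = forest_classify(class_trees, sample)     # two of three trees vote "fault"
estimate = forest_regress(regress_trees, sample)
```

Because each tree can be evaluated independently, the per-tree work parallelises easily and the final reduction is cheap.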

Gaussian processes provide a further tool for machine learning and have been proposed as backups for DNNs because, being based on probability theory, they provide not just estimates based on training data but an idea of the confidence in the prediction. Using that information, downstream algorithms can choose to be more cautious about the output from the machine-learning systems and take appropriate action.
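As a rough sketch of how a downstream algorithm might use that confidence information, the code below implements a small one-dimensional Gaussian-process regressor and gates its output on the predicted standard deviation. The RBF kernel, the noise level, the `max_std` threshold and the helper names are all assumptions chosen for illustration, and the dense linear solve is only suitable for very small training sets.

```python
import math


def rbf(a, b, length=1.0):
    """Squared-exponential kernel: nearby inputs are strongly correlated."""
    return math.exp(-0.5 * ((a - b) / length) ** 2)


def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    m = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def gp_predict(xs, ys, x_new, noise=1e-6):
    """Return the posterior mean and standard deviation at x_new."""
    k = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    k_star = [rbf(a, x_new) for a in xs]
    mean = sum(ks * al for ks, al in zip(k_star, solve(k, ys)))
    var = rbf(x_new, x_new) - sum(ks * v for ks, v in zip(k_star, solve(k, k_star)))
    return mean, math.sqrt(max(var, 0.0))


def accept_prediction(std, max_std=0.2):
    """Hypothetical gate: only trust predictions the model is confident about."""
    return std <= max_std


train_x, train_y = [0.0, 1.0, 2.0], [0.0, 1.0, 0.0]   # made-up observations
near_mean, near_std = gp_predict(train_x, train_y, 1.0)   # close to known data
far_mean, far_std = gp_predict(train_x, train_y, 10.0)    # far from any data
```

Near the training data the standard deviation is small and the prediction passes the gate; far from it the uncertainty grows towards the prior and the prediction is rejected, which is the cue for the system to fall back on more cautious behaviour.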

For all but the simplest machine-learning techniques, a key issue for implementation on embedded systems is performance. Key to the adoption of DNNs by the research community and then by the major cloud-computing operators was the way in which the processing could be accelerated by the shader engines provided by high-end graphics processing units (GPUs). By providing multiple floating-point engines in parallel, GPUs deliver much higher throughput for typical DNN workloads, for both training and inferencing. For the embedded space, suppliers such as NVIDIA, with its Tegra X1, and NXP, through the i.MX family, provide GPUs that are able to run DNN and similar arithmetically intensive workloads.

A concern for users of GPUs for machine learning is energy consumption. The relatively small local memories to which shader cores have access can lead to high rates of data exchange with main memory. To help deal with the memory demands, operators of cloud servers are looking to customised DNN processors that are designed to work with the memory-access patterns of DNNs and so reduce the energy consumed in accesses to off-chip DRAM. An example is Google’s Tensor Processing Unit (TPU). Similar custom processors are now being introduced for embedded-systems processors. An example is the machine-learning processor in Qualcomm’s Snapdragon platform.

Dedicated DNN engines take advantage of optimisations that are possible for typical embedded applications. Typically, training involves many more calculations than inferencing. As a result, an increasingly common approach to machine learning in embedded systems is to hand off training to cloud-based servers and only perform inferencing on the embedded hardware.

Researchers have found that the full resolution of floating-point arithmetic provided by GPUs and general-purpose processors is not required for many inferencing tasks. The performance of many DNNs has been shown to be only marginally reduced by the use of approximation. This makes it possible to reduce the arithmetic resolution to 8 bits or even lower. Some DNNs even operate with neuronal weights that can take only two or three discrete values.
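A minimal sketch of what reducing resolution can look like in practice is shown below. It assumes a simple symmetric scheme in which floating-point weights are mapped onto signed 8-bit integers with one shared scale factor, plus a threshold-based mapping to three values; the function names, the threshold and the sample weights are illustrative, and real toolchains use more elaborate calibration.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] with one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the 8-bit representation."""
    return [q * scale for q in quantized]


def ternarize(weights, threshold):
    """Reduce each weight to one of three values: -1, 0 or +1."""
    return [0 if abs(w) < threshold else (1 if w > 0 else -1) for w in weights]


weights = [0.82, -0.41, 0.03, -1.27, 0.6]     # made-up trained weights

q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)
worst_error = max(abs(w - r) for w, r in zip(weights, restored))
ternary = ternarize(weights, threshold=0.5)   # threshold is an assumption
```

With this scheme the rounding error on any weight is at most half of one quantisation step, which gives a feel for why accuracy often degrades only marginally.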

A further optimisation is network pruning. This uses analysis of the post-training DNN to strip out connections between neurons that have little to no influence on the output. Removing their connections avoids performing unnecessary calculations and, as a further benefit, greatly reduces the energy consumed by memory accesses.
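One simple way to approximate "little to no influence" is to remove weights whose magnitude falls below a threshold. The sketch below assumes that heuristic, which is a common one but not necessarily the analysis any particular tool performs; the layer values and the threshold are invented for the example.

```python
def prune_by_magnitude(layer, threshold):
    """Zero out connections whose weight magnitude is below the threshold."""
    return [[w if abs(w) >= threshold else 0.0 for w in row] for row in layer]


def count_connections(layer):
    """Number of multiply-accumulate operations the layer still needs."""
    return sum(1 for row in layer for w in row if w != 0.0)


def sparse_forward(layer, inputs):
    """Compute each neuron's weighted sum, skipping pruned connections."""
    return [
        sum(w * x for w, x in zip(row, inputs) if w != 0.0)
        for row in layer
    ]


# Made-up weights for a layer with three inputs and two neurons.
layer = [[0.9, -0.002, 0.4],
         [0.001, 0.7, -0.003]]

pruned = prune_by_magnitude(layer, threshold=0.01)
before, after = count_connections(layer), count_connections(pruned)
outputs = sparse_forward(pruned, [1.0, 1.0, 1.0])
```

In this toy layer half of the connections disappear, and with them half of the multiplications and weight fetches.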

The field-programmable gate array (FPGA) is well positioned to take advantage of approximate-computing and similar optimisations for inferencing. Although Arm and other microprocessor implementations now have single-instruction multiple-data (SIMD) pipelines that are optimised for low-resolution integer data, down to 8-bit, the FPGA allows bit resolution to be tuned at a fine-grained level. According to Xilinx, reducing precision from 8-bit to binary reduces FPGA lookup table (LUT) usage by 40 times.
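Part of the reason binary precision maps so well onto simple logic is that a dot product of +1/-1 values reduces to a bitwise comparison and a bit count. The software sketch below illustrates that trick, which is widely used in binarised networks; it is not a description of any vendor's implementation, and the helper names and sample vectors are assumptions.

```python
def pack_bits(values):
    """Encode a vector of +1/-1 values as an integer: +1 -> bit 1, -1 -> bit 0."""
    word = 0
    for i, v in enumerate(values):
        if v > 0:
            word |= 1 << i
    return word


def binary_dot(a_bits, b_bits, n):
    """Dot product of two packed +1/-1 vectors of length n.

    Matching bits contribute +1 and differing bits contribute -1,
    so the result is n minus twice the number of mismatches.
    """
    mismatches = bin(a_bits ^ b_bits).count("1")
    return n - 2 * mismatches


# Made-up binary activations and weights.
activations = [1, -1, 1, 1, -1, -1, 1, -1]
weights = [1, 1, -1, 1, -1, 1, 1, -1]

packed = binary_dot(pack_bits(activations), pack_bits(weights), len(weights))
reference = sum(a * w for a, w in zip(activations, weights))
```

The packed version needs no multipliers at all, only an exclusive-OR and a population count, which are the kind of operations that lookup-table logic implements cheaply.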

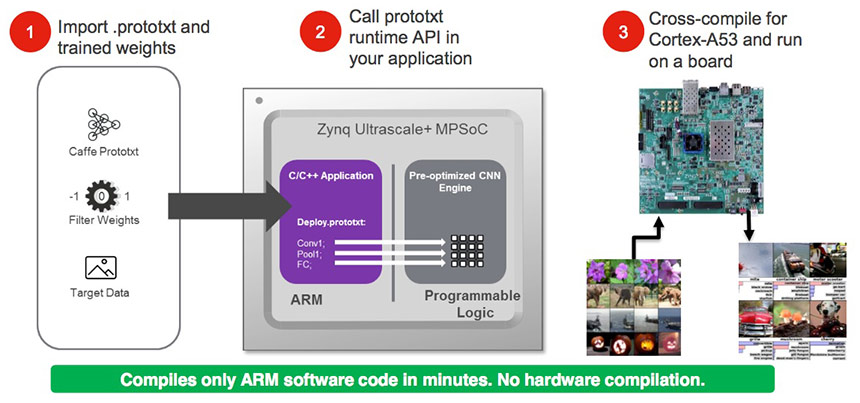

Platforms such as the Ultra96 board make it possible to split processing across a general-purpose processor and a programmable-logic fabric with support for close interactions between the two. Based on the Xilinx Zynq UltraScale+ MPSoC architecture, such a platform eases the deployment of complex DNN architectures that make use of special-purpose layers. The FPGA fabric inside the MPSoC can handle convolutional and pooling layers, as well as thresholding functions, while the Zynq’s Arm processor, running a Linux-based operating system and supporting software, manages dataflow and executes layers that have less predictable memory-access patterns.

Within the FPGA fabric of a platform such as the Ultra96 board, developers can call upon the programmable DSP slices, which can easily be configured to act as dual 8-bit integer processors, as well as the lookup-table logic to implement binary or ternary processing. A key advantage of the use of programmable hardware is the ability to perform layer merging. The arithmetic units can be interconnected directly or through block RAMs so that data produced by each layer is forwarded without using external memory as a buffer.

Although machine-learning algorithms can be complex to create, the research community has embraced open source. As a result, there are a number of freely available packages that developers can call upon to implement systems, with the caveat that most were developed for use on server platforms. Vendors such as Xilinx have made it possible for users of their hardware to port DNN implementations to FPGA. The Xilinx ML Suite, for example, can take a Caffe DNN model that has been trained in the desktop or server environment and port it to a Zynq-based target. The translator splits processing between custom code compiled for the Arm processor and a pre-optimised convolutional neural network loaded into the FPGA fabric.

Fig.3: xfDNN: Direct Deep Learning Inference from Caffe

For non-DNN machine-learning workloads, developers can gain access to a wide variety of algorithms, including SVM and random-forest implementations, through open-source libraries. Most of these libraries are intended for the Python programming language rather than the C/C++ generally favoured for embedded-systems development. However, the growing popularity of Linux in embedded systems makes it relatively straightforward to load a Python runtime onto the target and use these libraries there.

Thanks to the combination of higher-performance hardware and the availability of tools that allow prototyping, machine learning is becoming a reality for embedded systems.

Machine Learning for Embedded Systems - Date published: 15th October 2018 by Farnell element14